Never Changing Conversational AI Will Eventually Destroy You

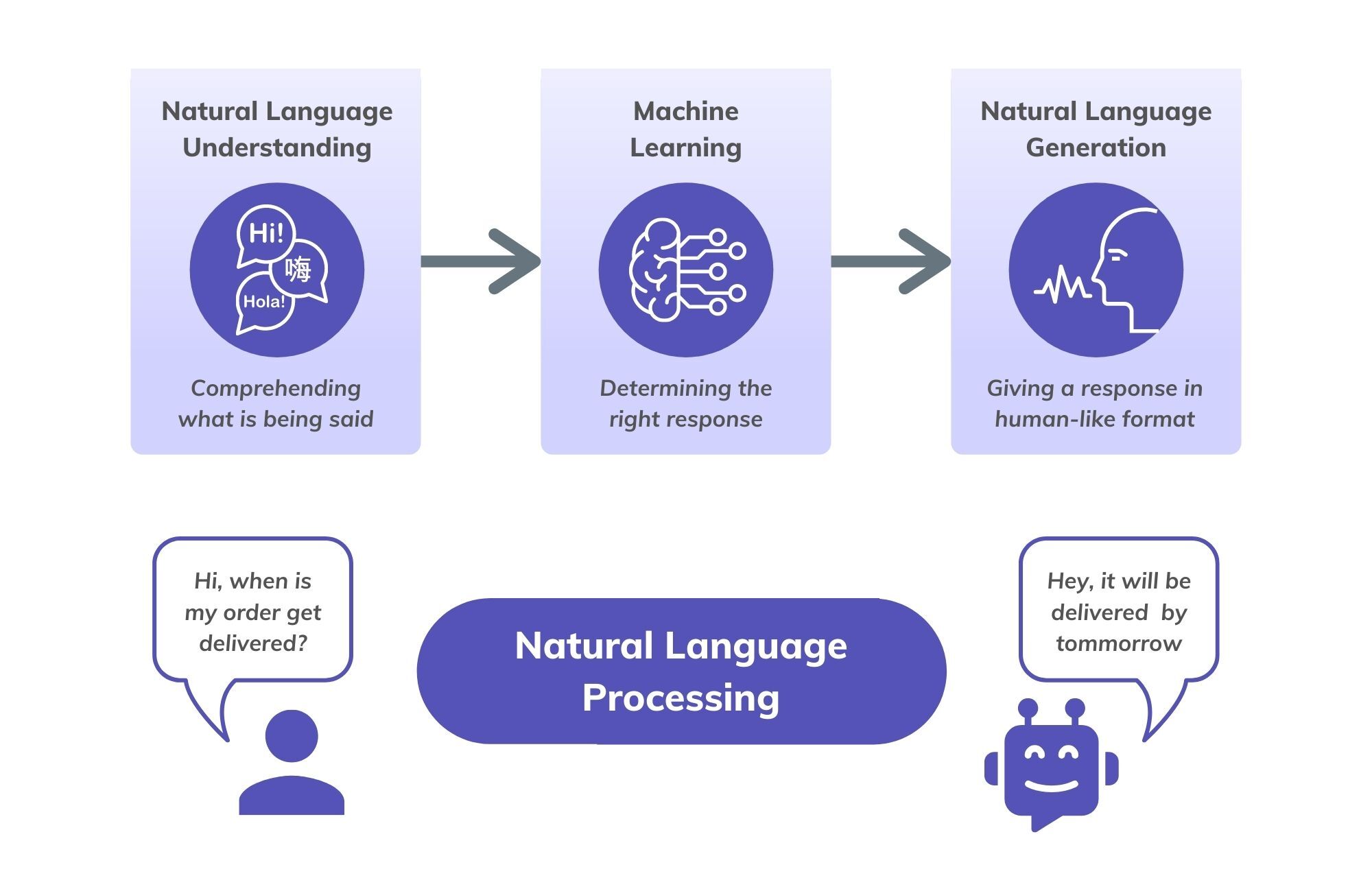

KeyATM allows researchers to use keywords to form seed topics that the model builds from. Chat Model Route: If the LLM deems the chat model's capabilities sufficient to address the reshaped query, the query is processed by the chat model, which generates a response based on the conversation history and its inherent knowledge. This determination is made by prompting the LLM with the user’s query and relevant context. By defining and implementing a decision mechanism, we will determine when to rely on the RAG’s knowledge retrieval capabilities and when to reply with more casual, conversational responses. Inner Router Decision - Once the query is reshaped into a suitable format, the inner router determines the appropriate path for obtaining a comprehensive answer. They may have trouble understanding the user's intent and providing an answer that exceeds their expectations. Traditionally, benchmarks focused on linguistic tasks (Rajpurkar et al., 2016; Wang et al., 2019b, a), but with the recent surge of more capable LLMs, such approaches have become obsolete. AI algorithms can analyze data faster than humans, allowing for more informed insights that help create authentic and meaningful content. These sophisticated algorithms allow machines to understand, generate, and manipulate human language in ways that were once thought to be the exclusive domain of humans.
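The routing decision described above, where the LLM is prompted with the query and relevant context and asked whether the chat model is sufficient, could be sketched roughly as follows. This is only an illustration: `ROUTER_PROMPT`, `choose_route`, and the one-word `CHAT`/`RAG` verdict format are hypothetical names and conventions, not taken from the original post, and `llm` stands in for any callable that maps a prompt string to a completion string.

```python
from typing import Callable, List

# Hypothetical router prompt; the wording is an assumption, not the author's.
ROUTER_PROMPT = (
    "You are a router for a conversational assistant.\n"
    "Conversation history:\n{history}\n\n"
    "User query: {query}\n\n"
    "Reply with exactly one word: CHAT if the query can be answered from the "
    "conversation history and general knowledge, or RAG if it needs retrieval."
)


def choose_route(query: str, history: List[str], llm: Callable[[str], str]) -> str:
    """Ask the LLM which path should handle the query; returns 'chat' or 'rag'."""
    prompt = ROUTER_PROMPT.format(
        history="\n".join(history) or "(empty)",
        query=query,
    )
    verdict = llm(prompt).strip().upper()
    # Assumption: any unrecognised verdict falls back to retrieval,
    # since a grounded answer is the safer default.
    return "chat" if verdict.startswith("CHAT") else "rag"
```

In a real application `llm` would wrap whichever model client is in use; a stub such as `lambda prompt: "CHAT"` is enough to exercise the control flow.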

By taking advantage of free access options today, anyone interested has an opportunity not only to learn about this technology but also to apply its benefits in meaningful ways. The best hope is for the world’s leading scientists to collaborate on ways of controlling the technology. Alternatively, all of these functions can be used in a single chatbot, since this technology has a wide range of business use cases. One day in 1930, Wakefield was baking a batch of Butter Drop Do cookies for her guests at the Toll House Inn. We designed a conversational flow to determine when to leverage the RAG application or the chat model, using the COSTAR framework to craft effective prompts. The conversation flow is a crucial component that governs when to leverage the RAG application and when to rely on the chat model. This blog post demonstrated a simple approach to transform a RAG model into a conversational AI tool using LangChain. COSTAR (Context, Objective, Style, Tone, Audience, Response) offers a structured approach to prompt creation, ensuring all key aspects influencing an LLM’s response are considered for tailored and impactful output. Two-legged robots are difficult to balance properly, but humans have gotten better with practice.
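As a rough illustration of the COSTAR structure, the six components can be held in a small container and rendered into one prompt string. The class name `CostarPrompt`, the `render` method, and the `# SECTION #` header layout are assumptions made for this sketch; the post does not show its actual prompt template.

```python
from dataclasses import dataclass, fields


@dataclass
class CostarPrompt:
    """Hypothetical container for the six COSTAR components."""
    context: str
    objective: str
    style: str
    tone: str
    audience: str
    response: str

    def render(self) -> str:
        # Emit one labelled section per component, in C-O-S-T-A-R order
        # (dataclass fields are returned in definition order).
        sections = [
            f"# {f.name.upper()} #\n{getattr(self, f.name)}" for f in fields(self)
        ]
        return "\n\n".join(sections)


router_prompt = CostarPrompt(
    context="You sit in front of a RAG application and a chat model.",
    objective="Decide which of the two should answer the user's query.",
    style="Concise and decisive.",
    tone="Neutral.",
    audience="An automated pipeline that parses your reply.",
    response="A single word: CHAT or RAG.",
).render()
```

Keeping each component as a separate field makes it easy to spot when one of them, for example the audience, has been left unspecified.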

In the rapidly evolving landscape of generative AI, Retrieval Augmented Generation (RAG) models have emerged as powerful tools for leveraging the vast knowledge repositories available to us. Industry-Specific Expertise - Depending on your sector, choosing a chatbot with specific knowledge and competence in that field can be advantageous. This adaptability enables the chatbot to integrate seamlessly with your business operations and suit your goals and objectives. The advantages of incorporating AI software applications into business processes are substantial. How to connect your existing business workflows to powerful AI models, without a single line of code. Leveraging the power of LangChain, a robust framework for building applications with large language models, we can bring this vision to life, empowering you to create truly advanced conversational AI tools that seamlessly blend knowledge retrieval and natural language interaction. However, simply building a RAG model is not enough; the true challenge lies in harnessing its full potential and integrating it seamlessly into real-world applications. Chat Model - If the inner router decides that the chat model can handle the query effectively, it processes the query based on the conversation history and generates a response accordingly.

Vectorstore Relevance Check: The inner router first checks the vectorstore for relevant sources that could potentially answer the reshaped query. This approach ensures that the inner router leverages the strengths of the vectorstore, the RAG application, and the chat model. This blog post, part of my "Mastering RAG Chatbots" series, delves into the fascinating realm of transforming your RAG model into a conversational AI assistant, acting as an invaluable tool to answer user queries. This application uses a vector store to search for relevant information and generate an answer tailored to the user’s question. Through this post, we will explore a simple but useful approach to endowing your RAG application with the ability to engage in natural conversations. In simple terms, AI is the ability to train computers - or currently, to program software systems, to be more specific - to observe the world around them, gather data from it, draw conclusions from that data, and then take some kind of action based on those conclusions.
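One way to picture the vectorstore relevance check is as a similarity search with a cut-off: if no stored source scores above the threshold, the router has nothing relevant to hand to the RAG application. The sketch below uses a plain in-memory list and cosine similarity. The function names, the `(text, vector)` store layout, and the `0.75` threshold are all assumptions for illustration; the post does not state how relevance is measured, and a real vector store would supply its own search API.

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def relevant_sources(
    query_vec: Sequence[float],
    store: List[Tuple[str, Sequence[float]]],
    threshold: float = 0.75,  # assumed cut-off, to be tuned per embedding model
) -> List[str]:
    """Return stored texts scoring at or above the threshold, best first."""
    scored = [(cosine(query_vec, vec), text) for text, vec in store]
    return [text for score, text in sorted(scored, reverse=True) if score >= threshold]


# Toy two-dimensional "embeddings", purely for demonstration.
store = [("refund policy", [1.0, 0.0]), ("office hours", [0.0, 1.0])]
hits = relevant_sources([0.9, 0.1], store)
# An empty result would signal that retrieval has nothing useful to offer.
```

An empty list from `relevant_sources` is the signal the router can use to skip retrieval and let the chat model answer instead.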
